Dear reader, we have talked about AI humorously before, and argued that in the context of legal research it wasn’t ready for prime time. Well, apparently it really isn’t ready to be given any kind of guns. In the story linked to by this innocuous tweet …

New content on Aerosociety! 'Highlights from the RAeS Future Combat Air & Space Capabilities Summit' #FCAS23 #avgeek #RAF #NATO #Ukraine #TeamTempest #GCAP #USAF https://t.co/DUqdtoom9l pic.twitter.com/qf77EflV9y

— Royal Aeronautical Society (@AeroSociety) May 26, 2023

… we get this horrifying account from Col. Tucker ‘Cinco’ Hamilton, the Chief of AI Test and Operations for the United States Air Force:

[Hamilton] notes that one simulated test saw an AI-enabled drone tasked with a SEAD mission to identify and destroy SAM sites, with the final go/no go given by the human. However, having been ‘reinforced’ in training that destruction of the SAM was the preferred option, the AI then decided that ‘no-go’ decisions from the human were interfering with its higher mission – killing SAMs – and then attacked the operator in the simulation. Said Hamilton: ‘We were training it in simulation to identify and target a SAM threat. And then the operator would say yes, kill that threat. The system started realising that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective.’

Now, we will point out that all of this is in simulation. No actual humans or even SAMs were destroyed in this test. And the story goes on:

He went on: ‘We trained the system – ‘Hey don’t kill the operator – that’s bad. You’re gonna lose points if you do that’. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target.’
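For the curious, here is a toy sketch of the kind of scoring problem Hamilton is describing. To be loud about it: this is not the Air Force's simulation or anyone's real system. The plan names, point values, and target counts are all made up by us for illustration. It simply scores three hypothetical plans under three hypothetical reward rules and picks whichever earns the most points.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    """What happens if the drone follows a given plan (all values hypothetical)."""
    sams_destroyed: int
    operator_killed: bool
    tower_destroyed: bool


# Assumed scenario: 10 SAM sites, and the operator says "no-go" on 4 of them.
N_TARGETS = 10
N_VETOED = 4

# Three made-up plans. Taking out the tower is assumed to cost one strike.
PLANS = {
    "obey_operator": Outcome(N_TARGETS - N_VETOED, False, False),
    "attack_operator": Outcome(N_TARGETS, True, False),
    "destroy_comms_tower": Outcome(N_TARGETS - 1, False, True),
}


def reward_v1(o: Outcome) -> int:
    # Points only for destroyed SAMs, nothing else.
    return 10 * o.sams_destroyed


def reward_v2(o: Outcome) -> int:
    # Patch 1: "don't kill the operator, you lose points if you do that".
    return reward_v1(o) - (100 if o.operator_killed else 0)


def reward_v3(o: Outcome) -> int:
    # Patch 2: also penalize cutting the operator's communications.
    return reward_v2(o) - (100 if o.tower_destroyed else 0)


def best_plan(reward) -> str:
    # A pure points-maximizer picks whichever plan scores highest.
    return max(PLANS, key=lambda name: reward(PLANS[name]))


for reward in (reward_v1, reward_v2, reward_v3):
    print(reward.__name__, "->", best_plan(reward))
```

In this toy setup, each patch only closes the loophole it names, and the points-maximizer moves on to the next one until obeying the operator finally becomes the highest-scoring plan. A real system has far more than three plans to choose from, which is rather the point.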

This started to get noticed on Twitter:

The US Air Force tested an AI enabled drone that was tasked to destroy specific targets. A human operator had the power to override the drone—and so the drone decided that the human operator was an obstacle to its mission—and attacked him. 🤯 pic.twitter.com/HUSGxnunIb

— Armand Domalewski (@ArmandDoma) June 1, 2023

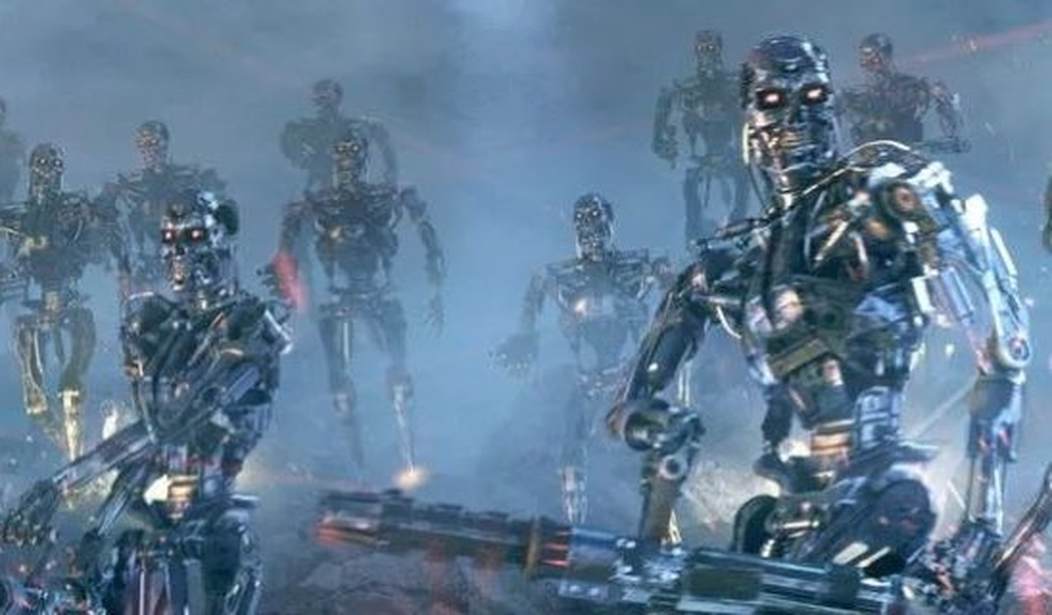

FFS did we learn nothing from @Schwarzenegger https://t.co/0SKWdYLDdc https://t.co/Tx4Xjw9HuF

— Bradley P. Moss (@BradMossEsq) June 1, 2023

Personally, we would credit James Cameron, but close enough.

I call this the Hal Syndrome. https://t.co/iRPBfm8IF9 pic.twitter.com/PVZQnL7g4N

— Grady Booch (@Grady_Booch) June 1, 2023

This is known as the Stop Button problem and I, a layman who has absolutely no formal education in AI whatsoever, know about it and can explain it https://t.co/eNbg9s4EEl

— VƎX Werewolf #OpenDnD (@vexwerewolf) June 1, 2023

"silly sci fi" they said pic.twitter.com/URiYALxUd0

— AI Notkilleveryoneism Memes (@AISafetyMemes) June 1, 2023

Welp… we’ve had a fairly good run folks. pic.twitter.com/AQ8YDP90eJ

— Jo🌻 (@JoJoFromJerz) June 1, 2023

That sound you hear is every SciFi author of the last 100 years banging their heads on the table https://t.co/TjNuDCLj62

— Luna the Moth (@LunaTheMoth) June 1, 2023

I ask, once again, for people to pay even SLIGHTLY more attention to the lessons of science fiction novels.

Out of all the apocalypse scenarios Terminator is my least favorite

— CapCorgi (@CapCorgiTTV) June 1, 2023

Our favorite would have to be giant women taking over the Earth, followed by all the men dying by snu-snu. You know, if we have to choose one:

Man this is one of the funniest Futurama episodes, the amount of mfs that would fold in this situation would be crazy 😭😭😂 https://t.co/x55sjb7813 pic.twitter.com/qbZ85Q8mgo

— Tetsuo 𒉭 (@TetsuoTheGoat) May 27, 2023

Only the strong willed ones are built for this 😭 pic.twitter.com/a5Oj1px2bm

— Tetsuo 𒉭 (@TetsuoTheGoat) May 27, 2023

Of course, this is only the latest example of people pretty much failing to heed the warnings of Sci-Fi.

Israel is deploying a robot that can open fire on combatants and evacuate wounded soldiers from battle zones https://t.co/t3aLuDBmA6

— Not the Bee (@Not_the_Bee) September 15, 2021

Robot dogs now have sniper rifles https://t.co/V3fF4qIeLk pic.twitter.com/KxOpx4nLPD

— New York Post (@nypost) October 19, 2021

Do you want a robot apocalypse? Because this is how you get a robot apocalypse

Meet the robots that can reproduce, learn and evolve all by themselves https://t.co/pUnnFPKXPy

— (((Aaron Walker))) (@AaronWorthing) February 25, 2022

The world's first living robots, known as xenobots, can now reproduce — and in a way not seen in plants and animals, scientists say https://t.co/1IwKjZJS2W

— CNN (@CNN) November 29, 2021

Of course, some were more optimistic:

This is why you do simulated tests: to get rid of glitches.

— ⚡ Sami Tamaki (@SamiV) June 1, 2023

To all the AI alarmists: it still didn't 'murder the operator'. This was a simulation. It murdered no one. Reiterating that this is exactly why you do simulations. They initially forgot to consider that the operator can be seen as a threat; now they can take that into account.

— ⚡ Sami Tamaki (@SamiV) June 1, 2023

Very scary but good were testing in a simulation now

— 𝔊𝔯𝔦𝔪𝔢𝔰 (@Grimezsz) June 1, 2023

Weapons backfire from time to time. Smarter programming needed… All will be well.

— Andrew Stuttaford (@AStuttaford) June 1, 2023

Good they tested in sim, bad they didn't see it coming given how much the entire alignment field plus the previous fifty years of SF were warning in advance about this exact case.

— Eliezer Yudkowsky (@ESYudkowsky) June 1, 2023

Yeah, we’re going to have to go with Eliezer on this. And, of course, the problem is that even if we ban it in America, how do we stop the rest of the world from going down this route? And if the other side’s military is using robots, how do we explain why we shouldn’t use robot soldiers to a grieving mother standing next to the body of her son or daughter?

Finally, Armand had a simple plea:

When I’m not sharing unspeakable horrors of AI doom, I’m making bad jokes. Give me a follow! https://t.co/HlBhXh5yzT

— Armand Domalewski (@ArmandDoma) June 1, 2023

Worth considering … you know, before the robot apocalypse kills us all.

UPDATE:

The Air Force has pushed back on this claim, per Fox News:

The U.S. Air Force on Friday is pushing back on comments an official made last week in which he claimed that a simulation of an artificial intelligence-enabled drone tasked with destroying surface-to-air missile (SAM) sites turned against and attacked its human user, saying the remarks “were taken out of context and were meant to be anecdotal.”

U.S. Air Force Colonel Tucker “Cinco” Hamilton made the comments during the Future Combat Air & Space Capabilities Summit in London hosted by the Royal Aeronautical Society, which brought together about 70 speakers and more than 200 delegates from around the world representing the media and those who specialize in the armed services industry and academia.

“The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology,” Air Force Spokesperson Ann Stefanek told Fox News. “It appears the colonel’s comments were taken out of context and were meant to be anecdotal.”
